Overview

This article covers the capture and transport of data used to populate a CodeMRI® Portfolio report.

Setup

CodeMRI® Portfolio reports are intended to display data across many software assets, which may be distributed across locations. When capturing data, Silverthread recommends one of two capture methods: local capture, in which source code is brought to the machine generating the Portfolio report, and distributed capture, in which a secondary tool generates portable metadata that is then moved to the machine generating the Portfolio report.

Local Capture

...

Figure 2: Workflow for Local Capture

The Local Capture case is the simplest configuration scenario. Identify a VM or local workstation, install CodeMRI® Platform on it, and scan the source code for all codebases to be included in the Portfolio. Once the scans are complete, the DataVault is automatically updated with the latest results. Finally, select the software systems to be included in the Portfolio and run the accompanying job. A Portfolio report including all selected software systems will then be generated. See Using CodeMRI® Platform to Create a Portfolio for more detail.

Distributed Capture

Distributed Using CodeMRI® Scanner

...

Figure 3: Workflow for Distributed Capture

In today’s distributed environments, it is sometimes difficult to gather the actual source code into a single location to produce a Portfolio report. The Distributed Capture case is designed to let more complex organizations quickly scan across remote locations without having to move the source code itself. In these cases an additional tool, the CodeMRI® Scanner, can be distributed to many remote teams to capture descriptive data about a software system. That data can then be transported back to the central location at which the Portfolio is being generated. This approach lowers administrative overhead and avoids the potentially complex network configuration needed to connect many remote sites directly.

...

Start a new instance of CodeMRI® Platform, or use one that is already running, connected to the DataVault into which the metadata will be transferred.

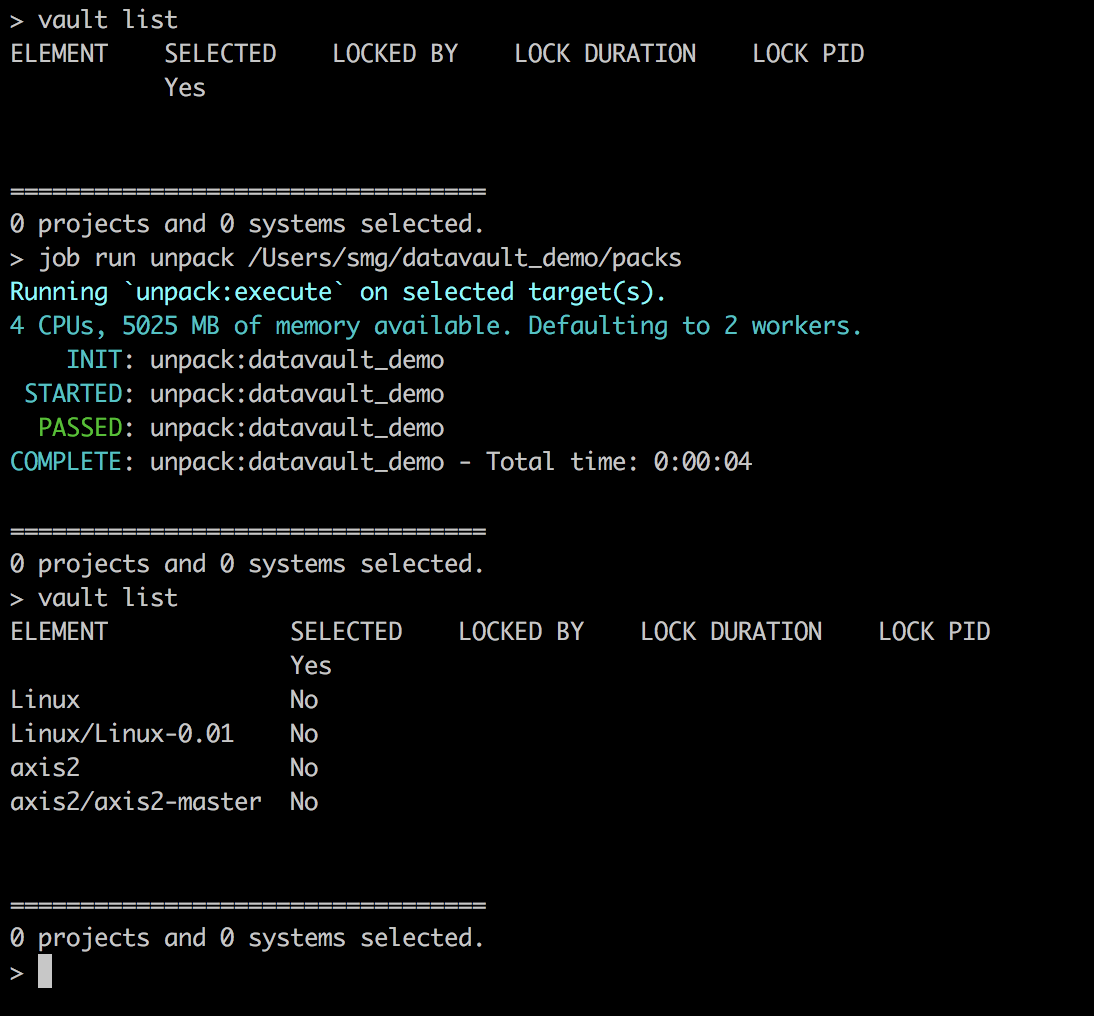

Run the “unpack” job, passing in the path to the target Scanner metadata, to add the scanned systems to the DataVault.

In this example we begin with an empty vault, then run the “unpack” job, passing in the path to the pack containing the axis2 and Linux systems. Once the job completes, these systems appear in the new DataVault and are ready for processing.

Note: As demonstrated here, the “unpack” job may be passed a directory containing multiple packs, which will be processed collectively.

Distributed Using CodeMRI® Platform

The CodeMRI® Platform is designed to allow portability of metadata between DataVaults. To transfer a system, or collection of systems, between two DataVaults:

Perform the scans as usual at the secondary location using CodeMRI® Platform.

Once the scans are complete, select all systems intended for transport and run the “pack” job.

In this example we have a DataVault populated with the axis2 and Linux codebases. A scan has already been completed for both systems. Running the “pack” job creates a zip of transportable data in the “packs” directory at the root of the DataVault.

These packed systems can now be transported to another DataVault at another site using any common mechanism (e.g. physical DVD, Dropbox, email).

Once the packed systems have arrived at the site where the Portfolio report is to be constructed, run the accompanying “unpack” job, passing in the path to the target pack, to add these systems to the new DataVault.
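Because packs may travel over physical media or email, it can be useful to confirm that each zip arrives unaltered before running “unpack”. The following is a minimal sketch of one way to do that with checksums. It is not part of CodeMRI®. The function names and the `manifest.json` file name are hypothetical, and it assumes only what is stated above: that packs are zip files sitting together in one directory.

```python
import hashlib
import json
from pathlib import Path
from typing import List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(packs_dir: Path, manifest_name: str = "manifest.json") -> Path:
    """Before transport: record a checksum for every .zip in packs_dir."""
    checksums = {p.name: sha256_of(p) for p in sorted(packs_dir.glob("*.zip"))}
    manifest = packs_dir / manifest_name  # hypothetical file name
    manifest.write_text(json.dumps(checksums, indent=2))
    return manifest


def verify_manifest(packs_dir: Path, manifest_name: str = "manifest.json") -> List[str]:
    """After transport: return the names of packs that are missing or altered."""
    expected = json.loads((packs_dir / manifest_name).read_text())
    bad = []
    for name, checksum in expected.items():
        candidate = packs_dir / name
        if not candidate.is_file() or sha256_of(candidate) != checksum:
            bad.append(name)
    return bad
```

In this sketch, `write_manifest` would be run on the “packs” directory at the sending site, and `verify_manifest` on the received copy. An empty result suggests the packs are intact and ready for “unpack”.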

In this example we begin with an empty vault, then run the “unpack” job, passing in the path to the pack containing the axis2 and Linux systems. Once the job completes, these systems appear in the new DataVault and are ready for processing.

Note: As demonstrated here, the “unpack” job may be passed a directory containing multiple packs, which will be processed collectively.
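When packs arrive from several remote sites, they can be gathered into one directory so that a single “unpack” run processes them together. The sketch below shows one possible way to stage them. It is not part of CodeMRI®. The `stage_packs` helper and the directory layout are assumptions for illustration, and it assumes the packs are `.zip` files as described for the “pack” job.

```python
import shutil
from pathlib import Path
from typing import Iterable, List


def stage_packs(sources: Iterable[Path], staging_dir: Path) -> List[Path]:
    """Copy every .zip found directly under each source directory into staging_dir.

    Raises FileExistsError instead of overwriting when two sites deliver
    packs with the same file name.
    """
    staging_dir.mkdir(parents=True, exist_ok=True)
    staged = []
    for source in sources:
        for pack in sorted(Path(source).glob("*.zip")):
            target = staging_dir / pack.name
            if target.exists():
                raise FileExistsError(f"{target} already staged; rename {pack}")
            shutil.copy2(pack, target)  # copy2 also preserves timestamps
            staged.append(target)
    return staged
```

The resulting staging directory is the path that would then be passed to the “unpack” job. Refusing to overwrite a same-named pack is a deliberate choice here, so that no site's data is dropped without notice.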